10 no-compromise steps to fast webpage delivery

I have two active sites. This one, and law191.org – my community immigration law office site. I keep them separate because I am not writing a splog. I need this blog space to write about anything and everything without worrying whether clients will approve or disapprove or whether it will affect my search position.

This post is about improving Google’s assessment of my office site’s performance from embarrassing to the high 90s. For an immigration lawyer, being found in search is very important. For a low-fee community lawyer, being found organically is the only game in town because high-stakes advertising isn’t in my budget.

Skip to the suggestions now – or read the background.

I did this work now (rather than in a few weeks) so that I could separate two factors in search position – performance quality and location. I am about to open a second office that will give me prime location. And before that happens, it seemed like a good idea to confront the performance issue.

Metrics matter. It is so easy to do stuff on the internet because it seems like a good idea, or someone has said that a magic incantation appeases the great god of Mountain View. So what is the baseline?

I usually ask clients how they found me, and have come to the conclusion that it is mostly through location search. Yes, I know there is a whole other demographic who discover you through content search – I get a lot of those from the Avvo Q&A, for example – but since the majority of those who come from the internet rather than from referrals use location, I test my site for location queries constantly.

Before this work I was at the point where I liked my site content. The metric is that people who saw it made a point of saying so and offering small tweak ideas. I got to this point after many iterations, and learning that an effective site isn’t about me, but about the reader and her viewpoint. So I’m not going to talk about site content except to say that I didn’t want to compromise on content to improve performance.

Local Search (find a lawyer)

When people search for me by city or county – my immigration law practice is in San Rafael, in Marin County – I am comfortably on page 1. People who need to find me, can. When the phone rings, it is mostly locals.

But in the wider Bay Area, local should not be too narrow. For example, because of bridges, I am only 20 minutes from the East Bay. Someone on the other side of the water is as close as someone from just up the freeway. Yet if people search for a Bay Area lawyer, I have been invisible. Through content improvement I went from lost in the long tail to position #50 in search, and there I stuck.

This may not be typical. To see where you sit in local versus not-quite-local search, you need to look at how Google, Yelp and the others handle demographics. They use the Nielsen TV audience demographic areas – obviously they do, since they hired old-fashioned marketing folks for this side of their business. The big hint that this is how it is done comes if you have ever used AdWords and tried to target ads to particular localities. Nielsen does not see the “Bay Area” as I do, or as a crow might. It sees the “Oakland, San Francisco and San Jose metropolitan area” as one demographic, and Marin County as a strange land across the Golden Gate Bridge. In many ways, that reflects a general prejudice that Marin is separate.

I win on local search because it is a small market. I lose big time on slightly wider search because I am not local. According to Google.

At least I think so. I have tried this for many local service searches, from Mexican restaurants to plumbers. Marin isn’t in the Bay Area. When a Marin business does make it in Bay Area search, it seems to have another office or store in the Bay Area demographic. But there is one exception I have found – a San Rafael endodontist. Maybe he has a perfect score in the backlinks department.

Because I have seen the few who find me from the “Bay Area” make the pilgrimage to San Rafael (it can be a long drive from San Jose), I am opening an Oakland office soon. That should have a major effect on search. But before then, I want to see if non-content quality has any benefit.

One last comment. I chose a very clean and responsive design that works well on mobile devices as well as computers. Here are desktop and mobile views of the site:

Google’s tools tell me I am mobile-ready. But I don’t see any more visibility because of it.

Improving Website Performance

The site had been pretty mediocre in terms of performance, but I suppose that happens when you focus on content. Also, because I like the modern “scroll down” mobile-style site with 3 or 4 sections (rather than links to other pages), the main page has a lot in it. And because I use https – I figured it was a good idea for a site that might involve confidential interaction at some point – every new connection needs an extra TLS handshake, so the security overhead amplifies the poor performance.

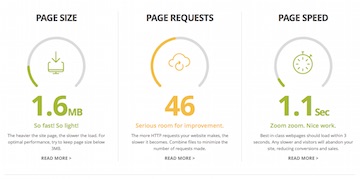

I started with a link from a “10 tools for SEO” list (why are lists so compulsive?) that led me to “Website Grader”. It analyzed my page performance in a very encouraging way: I had “room for improvement”, which is a polite way of saying my site sucked.

The “page speed” was more optimistic than the timing results from other similar online performance tools. But my big problem was the 46 page requests, on a site that also uses HTTPS. That’s terrible for a single webpage.

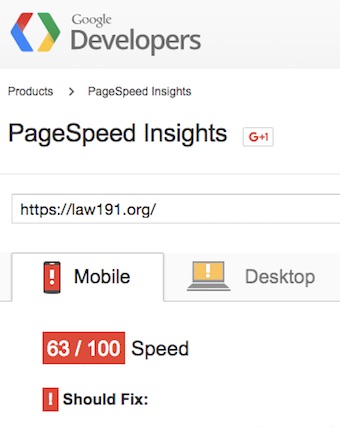

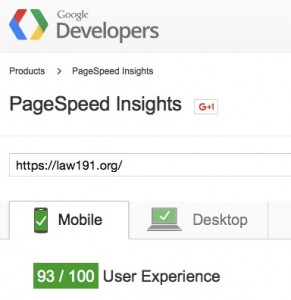

I moved on to Google’s PageSpeed Insights and found that law191.org was “in the red” for mobile performance, and only a little better for the desktop.

After all the steps below, with ZERO change to the content of the page – no compromises – I am at 93% mobile, 99% desktop.

What do you need for these steps? Three things. First, some steps are specific to the sort of website I have; for example, I use the Bootstrap framework in a very simple way. Second, I write my own web content, so it is easy for me to “inline” small amounts of style or script code. Third, I have a very friendly and responsive web hosting service, and I have full access to my site, including .htaccess.

1. Bootstrap – customize the css

If you use Bootstrap, you will likely be using the minimized (.min.css) version of the style file. But it contains everything you might possibly want. I only use Bootstrap for three things: the responsive grid system (I like to keep a single webpage that works on desktops and mobile devices), the top-of-page navigation, and the buttons.

Bootstrap provides a “customize and download” page. By trial and error, I discovered that I needed the jQuery “Collapse” plugin and the “Component animations” so that my navigation bar continued to function. Almost everything else I could exclude. This reduced the size of the Bootstrap footprint by nearly 70%. I indulged myself by keeping the typography CSS.

2. Avoid jQuery when it isn’t needed – go native

jQuery is everywhere on the internet. Sometimes it is necessary. Sometimes it is just what the developers are used to. Bootstrap’s JavaScript plugins “require” jQuery, and I need their JavaScript in order to collapse the navigation bar menu when the site is viewed on a mobile.

I found a project on GitHub that pandered to my prejudice against unnecessary jQuery. The Native Javascript for Bootstrap package keeps my page running without jQuery. I chose CDN delivery (see 10 below), but a stripped-down version is a project for later.

3. Merge, minimize (and inline) the CSS

Google tells you to merge small CSS and JS files to reduce the number of network requests. I did. Then I went a step further and inlined it all in the HTML file. That last step is probably not necessary, and maybe not even a good idea, since inlined CSS can’t be cached separately from the page. But it is nice to get a “green light” from Google, even if I go back to separate CSS files.
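If you want to try the merge-and-inline step without doing it by hand, here is a minimal Python sketch of the idea. It assumes simple `<link rel="stylesheet" href="...">` tags (rel before href, double quotes) and uses a deliberately naive minifier; a real build tool handles far more cases. The function names are my own invention, not from any particular tool.

```python
import re

# Matches simple <link rel="stylesheet" href="..."> tags only (assumes rel comes
# before href and double quotes are used).
LINK_RE = re.compile(r'<link\s+rel="stylesheet"\s+href="([^"]+)"\s*/?>', re.IGNORECASE)


def minify_css(css: str) -> str:
    """Naive minifier: drop comments, collapse whitespace, trim around { } ; ,"""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    css = re.sub(r"\s+", " ", css)
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)
    return css.strip()


def inline_stylesheets(html: str, load) -> str:
    """Replace all linked stylesheets with one merged, minified <style> block.

    `load` is any callable mapping an href to its CSS text, e.g.
    lambda href: pathlib.Path(href).read_text() for files next to the page.
    """
    first = LINK_RE.search(html)
    if first is None:
        return html
    merged = "".join(minify_css(load(href)) for href in LINK_RE.findall(html))
    before = html[: first.start()]
    after = LINK_RE.sub("", html[first.start():])  # drop every <link> tag
    # The merged CSS goes where the first <link> used to be.
    return f"{before}<style>{merged}</style>{after}"
```

I would keep the separate CSS files as the source of truth and only run something like this when publishing.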

4. Ask your ISP to use Keep-Alive

The next three steps are low-hanging fruit from your web hosting. First Keep-Alive. In the good old days (and on my hosted service until yesterday as well) each time your browser requested a file it opened a connection to the server, received the file and then closed the connection. And for small files, this open/close interaction is very expensive. Keep-Alive is a configuration that allows browsers to open a connection and then request a zillion files in the same session, and only close down after receiving all of them.
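Keep-Alive is switched on at the server, not in your pages, but it is easy to see what it buys you. This Python sketch starts a tiny local server (Python’s built-in one, standing in for a host’s Apache) that speaks HTTP/1.1, then fetches three files over a single connection. The file names are made up; the point is that only one TCP connection gets opened.

```python
import http.client
import http.server
import threading


class Handler(http.server.BaseHTTPRequestHandler):
    # Speaking HTTP/1.1 makes connections persistent (keep-alive) by default.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        body = f"you asked for {self.path}".encode()
        self.send_response(200)
        # Content-Length tells the client where this response ends, so the
        # same connection can carry the next request.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # keep the demo quiet
        pass


def fetch_all(paths):
    """Fetch every path over one HTTPConnection; return (bodies, sockets used)."""
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
    try:
        bodies, socks = [], []
        for path in paths:
            conn.request("GET", path)
            resp = conn.getresponse()
            bodies.append(resp.read().decode())  # read fully before reusing
            socks.append(conn.sock)              # keep refs so ids stay unique
        return bodies, len({id(s) for s in socks})
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
```

With Keep-Alive off, the server closes the connection after every response, and the browser has to reconnect (and, on an HTTPS site, redo the TLS handshake) for each file.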

This isn’t something I could do. But my ISP was (as usual) very kind and very proactive, and I asked and had Keep-Alive on my site very quickly.

5. Set expire time for browser caching (htaccess)

The second server tweak is “browser caching.” This is when you tell a browser that some files won’t change between visits to the webpage, so it can safely use its cached version. This is a huge win the second time someone comes to your webpage: a returning client doesn’t eat up her 4G bandwidth when she wants your phone number. It doesn’t help first impressions, but it is worth doing.

I did this in my “.htaccess” file. Many ISPs give you access to this file. My ISP gives me a secure shell with access to many things.

Here is the code to add to the .htaccess file:

## EXPIRES CACHING ##
# Requires mod_expires. Values must use straight (ASCII) quotes.
<IfModule mod_expires.c>
ExpiresActive On
ExpiresByType image/jpg "access 1 year"
ExpiresByType image/jpeg "access 1 year"
ExpiresByType image/gif "access 1 year"
ExpiresByType image/png "access 1 year"
ExpiresByType text/css "access 1 month"
ExpiresByType application/pdf "access 1 month"
# Most servers now label .js files application/javascript; keep both types.
ExpiresByType application/javascript "access 1 month"
ExpiresByType application/x-javascript "access 1 month"
ExpiresByType application/x-shockwave-flash "access 1 month"
ExpiresByType image/x-icon "access 1 year"
ExpiresDefault "access 2 days"
</IfModule>
## EXPIRES CACHING ##
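mod_expires turns each of those rules into an Expires date and a Cache-Control max-age (a number of seconds) on the response. Here is a small Python sketch that converts rules in the “access N unit” form into the max-age a browser would see. It assumes a month is 30 days and a year is 365 days, which is my understanding of how mod_expires counts; it is an illustration, not Apache’s code.

```python
# Seconds per unit. Assumption: mod_expires counts a month as 30 days and a
# year as 365 days.
UNIT_SECONDS = {
    "year": 365 * 86400,
    "month": 30 * 86400,
    "week": 7 * 86400,
    "day": 86400,
    "hour": 3600,
    "minute": 60,
    "second": 1,
}


def max_age(rule: str) -> int:
    """Convert an 'access [plus] N unit [N unit ...]' rule into seconds."""
    words = rule.lower().split()
    if not words or words[0] not in ("access", "now"):
        raise ValueError(f"only access-relative rules are handled: {rule!r}")
    words = [w for w in words[1:] if w != "plus"]  # 'plus' is optional filler
    if len(words) % 2:
        raise ValueError(f"expected number/unit pairs: {rule!r}")
    total = 0
    for num, unit in zip(words[::2], words[1::2]):
        total += int(num) * UNIT_SECONDS[unit.rstrip("s")]  # 'days' -> 'day'
    return total


def cache_control(rule: str) -> str:
    """The Cache-Control value a browser would receive for this rule."""
    return f"max-age={max_age(rule)}"
```

So the image rules above tell a browser it may reuse a picture for 31,536,000 seconds without asking the server again.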

If you want browser caching while files are still being tweaked, you “fingerprint” them. If you use “picture.png”, then you are stuck with whatever is in the browser cache. If you use “picture-01.png”, then when you change the picture you can call the new one “picture-02.png”; browsers have never seen that name, so they fetch it fresh.
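Numbering files by hand works. A common variation, swapped in here rather than taken from my own setup, is to put a short hash of the file’s contents into the name, so the name changes automatically whenever the picture does. A minimal Python sketch (the function name and the 8-character hash length are arbitrary choices):

```python
import hashlib
from pathlib import PurePosixPath


def fingerprint(name: str, content: bytes, length: int = 8) -> str:
    """Return e.g. 'img/picture-<hash>.png'; the name changes whenever the bytes do."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = PurePosixPath(name)
    return str(path.with_name(f"{path.stem}-{digest}{path.suffix}"))
```

The HTML then has to refer to the new name, which is the part that build tools usually automate.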

6. Compress (.htaccess again)

The idea here is that if you have a 100k text file, the time it takes for the file to get to the browser is far longer than for a 20k compressed version of the same file. It is also far longer than the time taken to compress and decompress at both ends. Win/win.

Images are usually compressed up the wazoo so you gain nothing having the server compress them too. But once your HTML file has grown through inlining of css and other tricks, then there is a big saving.
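A quick way to convince yourself of both claims is to gzip some repetitive HTML and some random bytes (a stand-in for an already-compressed JPEG or PNG) with Python’s standard library. mod_deflate serves gzip-encoded responses, which use the same DEFLATE algorithm. The sample markup is invented for the demo.

```python
import gzip
import os


def compressed_ratio(data: bytes) -> float:
    """gzip-compressed size as a fraction of the original size."""
    return len(gzip.compress(data)) / len(data)


# Roughly 100k of repetitive markup, like a page full of Bootstrap-style classes.
row = b'<div class="row"><div class="col-md-4"><p>Immigration help</p></div></div>\n'
html = row * 1300

# Random bytes stand in for a JPEG or PNG, which is already compressed.
image_like = os.urandom(100_000)

for label, data in (("html", html), ("image", image_like)):
    print(f"{label:>5}: {len(data):>7} bytes -> {compressed_ratio(data):.2f} of original")
```

The sample HTML is far more repetitive than a real page, so expect a less dramatic ratio on your own site. The random bytes typically come out slightly larger than they went in, which is why there is no point compressing images a second time.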

If your server allows for compression, you can add the following lines to your .htaccess to enable it.

<IfModule mod_deflate.c>
# compress text, html, javascript, css, xml:
# (On Apache 2.4, AddOutputFilterByType also needs mod_filter loaded.)
AddOutputFilterByType DEFLATE text/plain
AddOutputFilterByType DEFLATE text/html
AddOutputFilterByType DEFLATE text/xml
AddOutputFilterByType DEFLATE text/css
AddOutputFilterByType DEFLATE application/xml
AddOutputFilterByType DEFLATE application/xhtml+xml
AddOutputFilterByType DEFLATE application/rss+xml
AddOutputFilterByType DEFLATE application/javascript
AddOutputFilterByType DEFLATE application/x-javascript
# Or, compress certain file types by extension:
<Files "*.html">
SetOutputFilter DEFLATE
</Files>
</IfModule>

7. Use a CSS sprite for all the tiny pictures

Whatever optimization can be done by the server, there is no getting away from the fact that my page design uses a lot of small pictures. I don’t know whether they improve the content, but the eye-candy does break up the turgid text that an immigration lawyer might write for a potential client.

I could see two solutions – data URLs and CSS sprites. For a small icon-like picture (and most of my pictures were 10-12k jpegs) you can lose the problem of image handling completely by setting the source URI of the “img” tag to be an inline encoding of the image – a data URL. I did try a nice online image to data converter and it works as advertised.

However, I then read a post warning against using data URIs on mobile devices. The logic is that a data URI is processed every time you refresh the page. But an image can be cached in the browser. No idea if this is right or wrong, but I moved on.
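For the curious, a data URL is just the MIME type plus the base64-encoded bytes of the file. This Python sketch builds one for an `<img>` tag; the function names are my own. Note that base64 turns every 3 bytes into 4 characters, so the inlined image is about a third larger than the file it replaces.

```python
import base64
import html


def data_url(content: bytes, mime: str = "image/png") -> str:
    """Encode raw bytes as a data URL: data:<mime>;base64,<payload>."""
    payload = base64.b64encode(content).decode("ascii")
    return f"data:{mime};base64,{payload}"


def img_tag(content: bytes, alt: str, mime: str = "image/png") -> str:
    """An <img> whose picture travels inside the HTML instead of a separate request."""
    return f'<img src="{data_url(content, mime)}" alt="{html.escape(alt)}">'
```

To use it, read a small picture in binary mode and pass its bytes to `img_tag` along with its alt text and MIME type (for a JPEG, `"image/jpeg"`).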

CSS sprites sound magical. But the idea is simple, and it is like a contact print. Put all of your images in a single large image file, and then write some CSS styling that renders just your segment of the larger image at just the right point. I looked at a number of web guides and “tools” to help, and they were way too complicated for late Saturday night after a long week. I was about to give up when I found SpritePad.

So. I have over 20 pictures, most small, a few not so small. Even as compressed jpgs they are still about 300k of data. Spritepad gives you a canvas in a webpage, and you can then drag files from your desktop (at least on the mac straight from the finder) onto the canvas and then re-arrange them so they do not overlap. Here’s what I ended up with.

SpritePad gives you two free projects (at the moment). You download two files – a png contact-sheet of all the images and a .css file to use in your application.
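SpritePad did the layout and wrote the CSS for me. To show what that CSS boils down to, here is a minimal Python sketch that stacks images in a single column and emits one class per image with a negative background-position. The column layout, the 2px gap, the class names and the sheet file name are all my assumptions, not SpritePad’s actual output, and the sketch does not paste the pictures into the sheet image itself.

```python
from dataclasses import dataclass


@dataclass
class Tile:
    name: str    # used in the CSS class name, e.g. "map" -> .sprite-map
    width: int   # pixels
    height: int  # pixels


def stack_vertically(tiles, gap=2):
    """Place tiles in one column; return (sheet_width, sheet_height, [(tile, x, y)])."""
    placed, y = [], 0
    for tile in tiles:
        placed.append((tile, 0, y))
        y += tile.height + gap
    sheet_width = max((t.width for t in tiles), default=0)
    sheet_height = max(y - gap, 0)  # no gap after the last tile
    return sheet_width, sheet_height, placed


def sprite_css(tiles, sheet_url="sprite.png", gap=2):
    """One shared rule for the sheet, then one class per tile showing its slice."""
    _, _, placed = stack_vertically(tiles, gap)
    rules = [f".sprite {{ background-image: url({sheet_url}); background-repeat: no-repeat; }}"]
    for tile, x, y in placed:
        # Shift the sheet up and left so the tile's top-left corner sits at 0,0.
        rules.append(
            f".sprite-{tile.name} {{ width: {tile.width}px; height: {tile.height}px; "
            f"background-position: {-x}px {-y}px; }}"
        )
    return "\n".join(rules)
```

In the page, an element then uses both classes, e.g. `<div class="sprite sprite-map" title="Map to the office"></div>`, which matches the div-plus-title approach I ended up using.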

Each image is a CSS class that you can use with an element in your HTML page. When you use the class, it puts the image as the background of the element. At first I simply set the class of each image. But then I found that with no “src” attribute (because I no longer needed one), the image displayed its “alt” text. I considered simply setting src="", but then found some horror stories of (mostly older) browsers that don’t treat this sensibly. So I replaced my images with “<div>” elements and changed the “alt” attribute to a “title”.

I wonder if this might be part of the reason for the search ranking result (see end). Google may take “alt” text into consideration more than div titles.

But I was amazed that it actually worked, and as a result of the sprite and the file merging, my page requires 4 rather than 48 files.

Sprite images seem to work fine, particularly as I can nominate a non-sprite version of an image in my Open Graph metadata for Facebook and others to use.

8. Use a PNG compression tool

The one down-side of my use of SpritePad was that instead of 20 files totaling 300k, I now had one PNG file close to 2M in size.

I used the TinyPNG online compressor, and the panda saved me 75%. The sprite PNG is now about 500k, compared with 300k for the original separate files, but that is the only real downside.

I suspect that if I had used simple PNG images rather than supercompressed jpgs when I created my sprite PNG, then overall compression at the end might have been higher. Something to try another weekend.

9. Use (SVG and) static buttons for social media – no plugins.

At this point, all looked really good and fast. So I enabled Facebook, Google+ and Twitter share buttons and everything fell apart. All of my hard work gone. Back to being a bloated slow-loading page.

As a low-fee lawyer, any way of referring my services to people who need them is important. I don’t want to put my fees up to cover advertising costs. You learn a lesson early on that unless you have good outreach, you are invisible. You might as well not even come into the office. And no, non-profit agencies don’t refer people to you – they need clients for their waitlists. And government services don’t refer either because there are consequences in referring to someone who might do more harm than good – and only non-profits are seen as being regulated in their do-gooding. So, social media is very important for the independent solo social entrepreneur.

But social media is also a dilemma. The plugins are active. They watch. They track who is coming to my site. And for a lawyer who needs to respect the confidentiality of their potential clients, that is a big NO.

So I needed to take control. I needed static social media buttons that know nothing – unless the person who shares is prepared to tell them.
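A static share button can be nothing more than an ordinary link to the network’s share page, with the page address in the query string. Nothing loads from Facebook or Twitter until someone actually clicks. This Python sketch builds such links. The two endpoints are the commonly used public share URLs as far as I know, so check them before relying on them; Google+ is left out because it has since been shut down. The class names are hypothetical styling hooks.

```python
from html import escape
from urllib.parse import urlencode

# Public share pages and the query parameter each expects for the page URL.
# Assumption: these are the current endpoints; verify before use.
SHARE_ENDPOINTS = {
    "facebook": ("https://www.facebook.com/sharer/sharer.php", "u"),
    "twitter": ("https://twitter.com/intent/tweet", "url"),
}


def share_links(page_url: str) -> str:
    """Plain <a> links: no scripts, nothing loads from the network until a click."""
    links = []
    for network, (endpoint, param) in SHARE_ENDPOINTS.items():
        href = f"{endpoint}?{urlencode({param: page_url})}"
        links.append(
            f'<a class="share share-{network}" href="{escape(href)}" '
            f'target="_blank" rel="noopener noreferrer">Share on {network.title()}</a>'
        )
    return "\n".join(links)
```

Because the shared address is passed explicitly, the links can also carry `rel="noreferrer"`, so the network does not even learn which page the visitor clicked from beyond what the link itself says.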

I found some nice work on scriptless social media buttons, and this all worked fine, except that it relied on an extra font download to produce the Facebook “f”, the Twitter bird and the curly Google “G”. That download pushed my Google performance test out of the super green and back to the orange “could do better.”

It would be easy to find 3 small icons – and add them to my sprite sheet, or even produce data URIs for them. Too easy. But I am a consumer. And I found some labor of love social media button designs in SVG by a very talented guy called Matt. And you know how it is when you see something you gotta have? The feeling you used to get when you went to the Apple Store? Well I had to have these buttons, and they were straightforward to extract from his package and use. And SVG+CSS means NO FILES NEEDED.

10. CDNs

CDNs are “content delivery networks.” Copies of a file are kept in many places, and when a user needs it, she gets the one that is internet-geographically closest to her, and hopefully far faster than the one you might serve from your home server.

I only used one CDN offering here: the JavaScript for the native (jQuery-free) Bootstrap support. But decentralization, IPFS and the idea of a distributed internet based on content are the Next Big Thing, and they will make many of the performance issues we worry about while serving our pages seem very old-fashioned… you did WHAT, Grandpa?

And the very exciting result….

And the very exciting result from Google search for my extra-responsive, extra-fast webpage is… that I am down from position #50 to #57. Ah well, I can see the reasoning – anyone offering a lawyering service who spends this much time on his website shouldn’t appear high in search. So sad, too bad. If there is something in my list of performance improvements that triggered this hit, please let me know.

At least clients benefit. And the fast response for the page means that people might actually get to read it if they are far away. And while I said that low-fee lawyers rely on organic search, that is not quite true. We also rely on referrals, and one powerful referral is through social media. The more people who see the site and like it, the more who will “share” it with their friends in case one of them needs a low-fee community immigration lawyer. Maybe one of your friends does, so I hope you shared if you visited law191.org while reading this post.
